Human-machine interaction (HMI) has been an important field of research for decades, but even modern HMI applications do not feel as natural as human-human communication [1]. Even with human-like voice and gesture recognition rates, a large share of information gets lost along the way [2].

The Buxton model divides HMI into two categories: foreground and background interaction. Foreground interaction is explicit interaction in which the user’s attention is on the interface. Background interaction, in contrast, does not require the user’s attention; the actions take place on the user’s behalf [3][4]. Most applications operate only in the foreground, so they react only when the operator explicitly communicates with the machine. In human-human communication, however, the key to understanding each other does not lie in the foreground. It lies more in the information transferred implicitly in the background, such as body language and context [5].

For machines to relate explicitly communicated information to a certain context, including the physical environment and human activity, they first need to capture the situation and interpret it with a defined context model [6]. Integrating context sensitivity into applications like the Smart Workbench (SWoB) at the Regensburg Robotics Research Unit (RRRU) can extend their supportive capabilities without the need for explicit communication. The SWoB is a collaborative human-robot workplace that supports the worker in semi-automated tasks [7]. One of the key features to be implemented in the SWoB is the ability to interpret the whole scenario, including the state of the robot, the different components, and the current state of the operator. The latter is one of the most important parts of the whole scenario. Recognizing the conducted actions lets the operator communicate with the machine through implicit movements alone, without focusing on any communication interface.

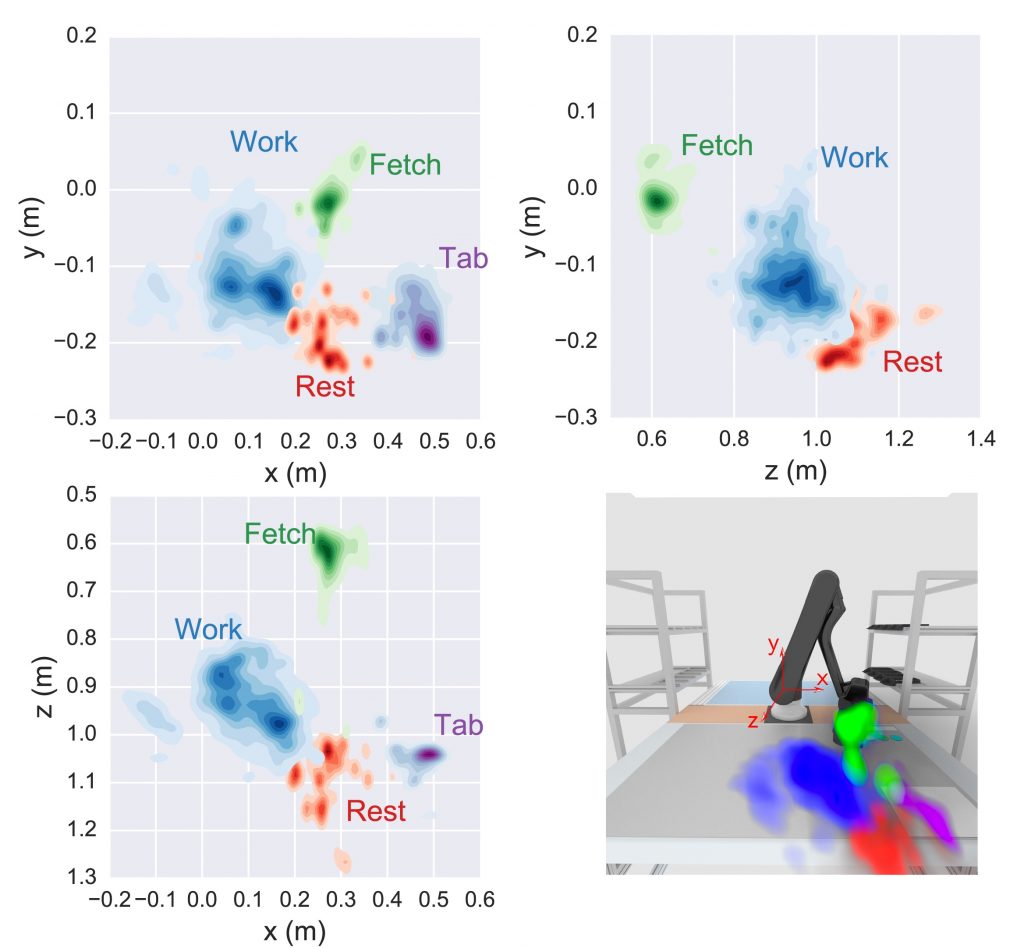

The goal of the project is to develop a method to classify implicit human inputs in a semi-structured production HMI scenario. To this end, the operator’s actions were investigated in an experimental assembly process that incorporates the actions rest, work, fetch and tab. The experiment was conducted with 14 test persons and recorded with the Kinect skeletal tracking toolbox. The resulting population data was evaluated to show the correlation between actions and high-density spatial hand distributions.

To validate the correlation, the data of four test persons were labeled and visualized. The results show that structured tasks like tab and fetch, which are bound to the position of a physical object, have a low standard deviation and can easily be distinguished from the other actions. For semi-structured actions like rest and work, however, the standard deviation is higher. This is because these actions are not bound to a specific location, and the area in which they are carried out is subconsciously chosen by the test person. But even with the wider distribution, the areas are mostly separated. The developed classification algorithm is based on these findings. The algorithm uses the test data to create models for each action and hand. The models are evaluated in real time during the assembly process, and the resulting classified samples for the unknown user are appended to a personalized dataset. The models are then updated with the accumulated data and therefore give a better estimate of the action distribution for the previously unknown user.
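The adaptive scheme described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's implementation: the form of the models is not specified here, so the sketch assumes each action is summarized by a per-axis mean and standard deviation of 3D hand positions for one hand, that a sample is accepted only if its normalized distance to the closest model is below a threshold, and that accepted samples are appended to the data before refitting. The names `ActionModel`, `classify`, `classify_and_adapt` and the default threshold of 3.0 are hypothetical.

```python
import math


class ActionModel(object):
    """Per-axis mean and standard deviation of hand positions for one action.

    Assumed model form for illustration; needs at least two samples.
    """

    def __init__(self, samples):
        self.samples = [tuple(s) for s in samples]
        self._fit()

    def _fit(self):
        n = len(self.samples)
        dims = range(len(self.samples[0]))
        self.mean = [sum(s[i] for s in self.samples) / float(n) for i in dims]
        # Sample standard deviation per axis; the floor avoids division by zero.
        self.std = [
            max(math.sqrt(sum((s[i] - self.mean[i]) ** 2
                              for s in self.samples) / (n - 1)), 1e-6)
            for i in dims
        ]

    def distance(self, x):
        """Distance of position x to the model, in standard deviations."""
        return math.sqrt(sum(((xi - m) / s) ** 2
                             for xi, m, s in zip(x, self.mean, self.std)))

    def update(self, x):
        """Append a newly classified sample and refit the model."""
        self.samples.append(tuple(x))
        self._fit()


def classify(models, x, threshold=3.0):
    """Return the label of the closest model, or None if none is close enough."""
    label = min(models, key=lambda name: models[name].distance(x))
    if models[label].distance(x) > threshold:
        return None
    return label


def classify_and_adapt(models, x, threshold=3.0):
    """Classify x and, if accepted, add it to the matching personalized model."""
    label = classify(models, x, threshold)
    if label is not None:
        models[label].update(x)
    return label
```

Here `models` would be a dictionary mapping action names to `ActionModel` instances built from the labeled test data, with one such dictionary per hand. Rejecting samples above the threshold keeps outliers from being appended to the personalized dataset, at the cost of leaving some samples unclassified.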

For future research, the classification algorithm needs to be tested further. Values for the thresholds need to be estimated, and false input data needs to be handled. The feature space could also be expanded to classify the actions more precisely. Here, the velocity of the movements could be a good additional indicator. With the expanded feature space, different machine learning approaches can be implemented and tested, which would make the threshold-based classification unnecessary. The resulting method could then be used to classify a complete series of actions and evaluate whether tasks that consist of multiple actions are done in the right order. Implemented in the SWoB, this application could be the key feature for tracking the work progress and, at the same time, the accuracy of the tasks carried out.
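As a rough illustration of the velocity feature mentioned above, hand speed can be approximated from consecutive tracked positions by finite differences. This is only a sketch: the function name `hand_speeds` is hypothetical, and the default frame rate of 30 frames per second is an assumption about the recording, not a value taken from the experiment.

```python
import math


def hand_speeds(positions, frame_rate=30.0):
    """Approximate hand speed between consecutive position samples.

    positions: sequence of (x, y, z) tuples sampled at a fixed rate.
    frame_rate: samples per second (assumed value, adjust to the recording).
    Returns one speed per pair of consecutive samples, in position units per second.
    """
    dt = 1.0 / frame_rate
    speeds = []
    for p, q in zip(positions, positions[1:]):
        dist = math.sqrt(sum((b - a) ** 2 for a, b in zip(p, q)))
        speeds.append(dist / dt)
    return speeds
```

Each speed value could then be paired with the corresponding hand position to form an extended feature vector for a learned classifier.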

Tools: Python 2.7 (SciPy, scikit-learn, Pandas, PyZMQ, Seaborn), Matlab, Blender

Jupyter Notebook documentation

References

[1] J. M. Hoc, “From human-machine interaction to human-machine cooperation,” Ergonomics, vol. 43, no. 7, pp. 833–843, 2000.

[2] W. Xiong, J. Droppo, X. Huang, F. Seide, M. Seltzer, A. Stolcke, D. Yu, and G. Zweig, “Achieving human parity in conversational speech recognition.” [Online]. Available: http://arxiv.org/pdf/1610.05256v2

[3] B. Buxton, “Integrating the periphery & context: A new model of telematics,” in Proceedings of Graphics Interface ’95, 1995, pp. 239–246.

[4] K. Hinckley, J. Pierce, E. Horvitz, and M. Sinclair, “Foreground and background interaction with sensor-enhanced mobile devices,” ACM Transactions on Computer-Human Interaction, vol. 12, no. 1, pp. 31–52, 2005.

[5] A. Schmidt, “Implicit human-computer interaction through context,” Personal Technologies, vol. 4, no. 2-3, pp. 191–199, 2000.

[6] A. Jaimes and N. Sebe, “Multimodal human–computer interaction: A survey,” Computer Vision and Image Understanding, vol. 108, no. 1-2, pp. 116–134, 2007.

[7] S. Niedersteiner, C. Pohlt, and T. Schlegl, “Smart workbench: A multimodal and bidirectional assistance system for industrial application,” in IECON 2015 – 41st Annual Conference of the IEEE Industrial Electronics Society. IEEE, 2015, pp. 2938–2943.